In the realm of business, where success hinges on the ability to build relationships and drive results, Charles Trostle shines as an experienced professional with a proven track record. With over 30 years of industry experience, Charles has honed his skills in software development and project management, making him a trusted expert in the field. What sets Charles apart is his unwavering commitment to team building and fostering collaboration, attributes that have consistently delivered tangible results.

Throughout his career, Charles has demonstrated a remarkable aptitude for bringing teams together to achieve common goals. His approach goes beyond just delivering products; it's about creating a collaborative environment where individuals can thrive, driving innovation and efficiency. As an Executive Product Owner, Charles leverages his unique strength in team building to not only meet business requirements but also elevate the performance of his teams, making him a valuable asset in any endeavor.

Charles Trostle - Certifications

I believe in devising strategies that lead to measurably improved, evolutionary change in products, systems, and processes. I serve the customers, clients, and teams I am engaged with and believe the most valuable resource of any enterprise is its people.

The most valuable resource of any enterprise is its people.

Delivering high-quality solutions increases business value.

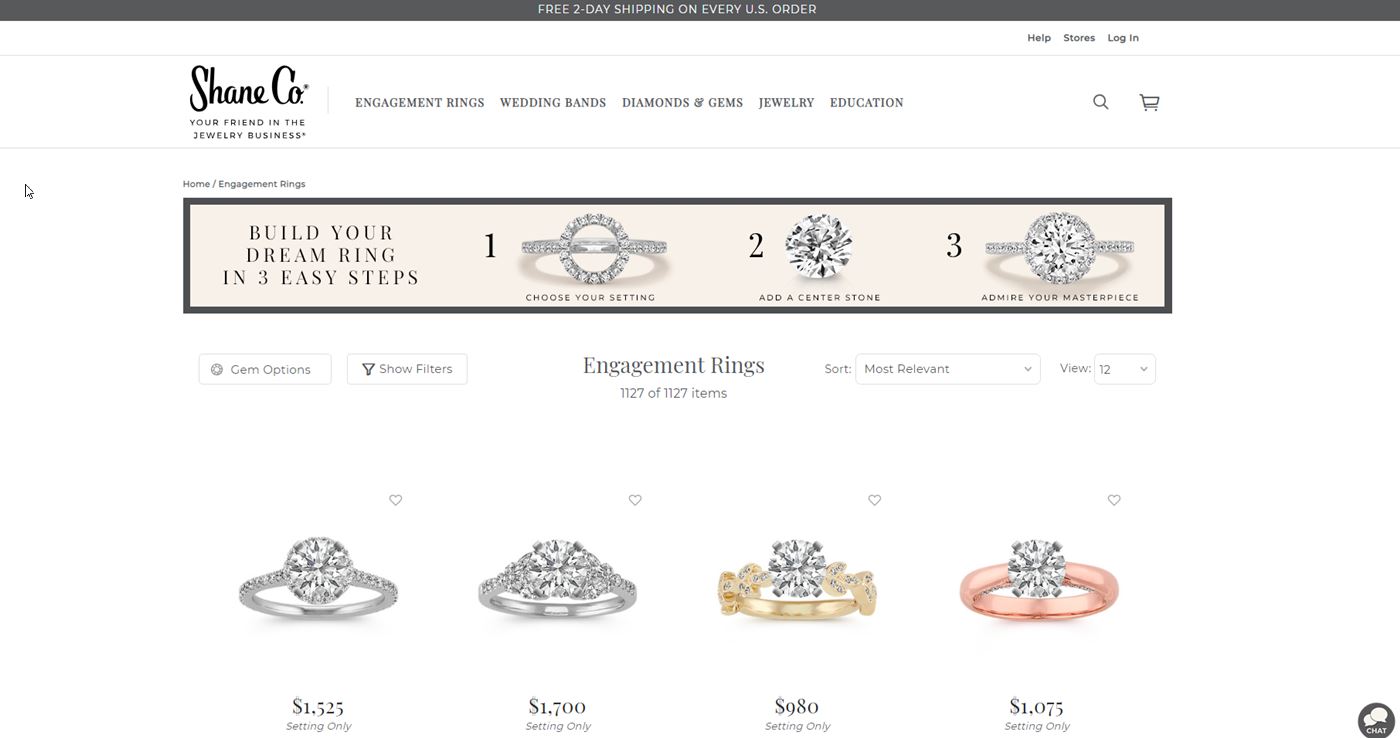

Expertise in the art of challenging design and user experience teams, leveraging industry best practices and over 20 years of experience in product development, always with the customer's best interests in mind.

Advocating for the adoption of Agile principles and industry-standard communication tools within organizations, serving in Scrum roles such as Scrum Master, Product Owner, and team member. Understanding the importance of top-down adoption, effective ceremonies, and coaching teams for project success.

Expertise in utilizing agile, waterfall, and hybrid methodologies for new product/solution development, updates/re-platforming of existing systems, and maintenance activities.

Identifying market needs and opportunities aligned with business objectives, crafting a clear vision, and developing realistic product roadmaps in Agile environments. Certified as a Scrum Product Owner by the Scrum Alliance.

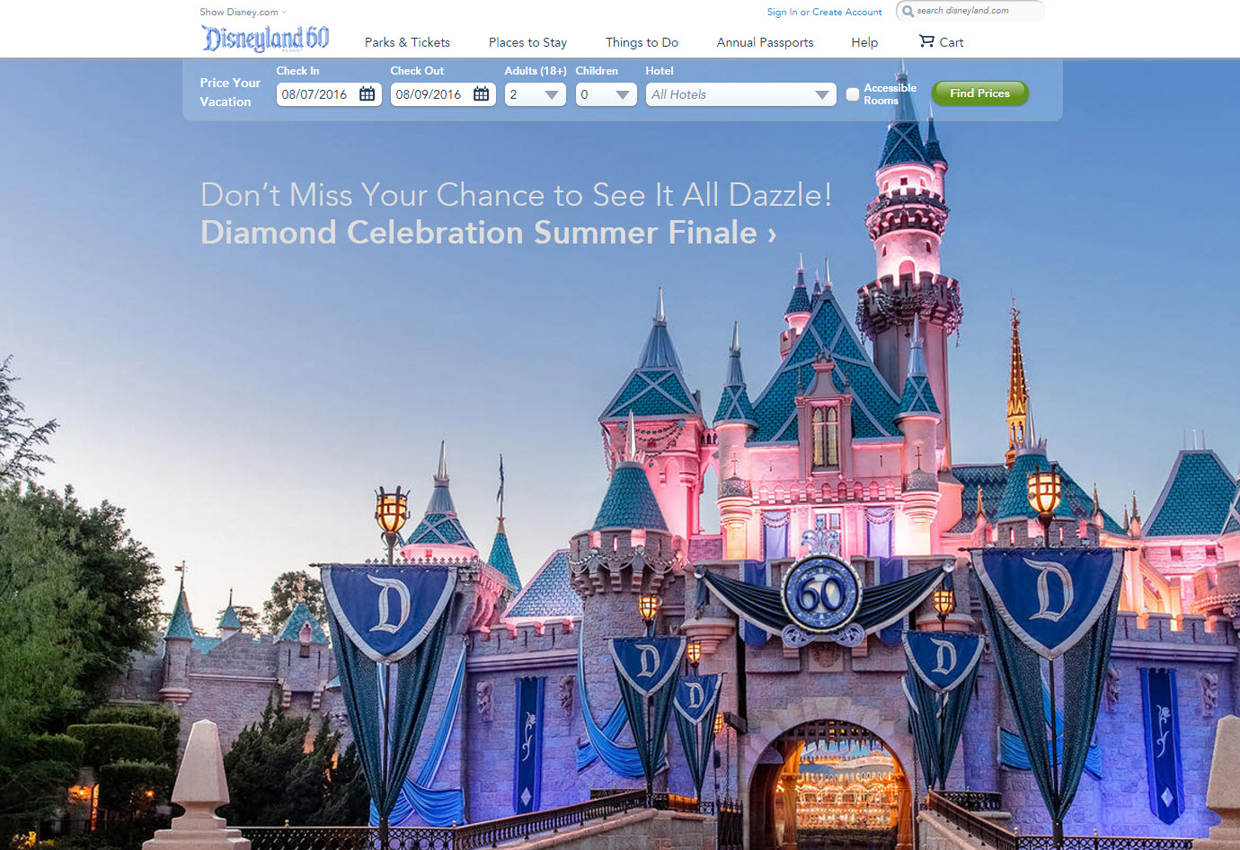

Proficient in managing teams responsible for designing and delivering complex Java-based booking engines, refining purchasing processes, and optimizing workflows, ultimately driving higher conversion rates and revenue. Experienced on multiple platforms, including custom Java, Hybris, and Demandware.

Continuously enhancing organizational performance begins with adopting a holistic view and approach to manage interconnected projects. This involves horizontal management within the organization, orchestrating teams, identifying best practices, and eliminating non-value-add processes and artifacts.

Proficient in leading diverse teams, embracing servant leadership principles, emphasizing transparency, and fostering collaboration with global, distributed teams.

Proficient in recruiting and retaining top project management, analyst, and technology talent to support digital initiatives. A natural mentor to staff and resources both within and outside the organization.

Leadership and management of diverse technology teams responsible for building, maintaining, and securing on-premises and cloud hardware and services. Expertise in defining disaster recovery protocols, security standards, internal support standards, SLAs, and ADA and PKI compliance standards.

Visionary approach to long-term strategy development, crafting realistic product roadmaps aligned with initiatives, and optimizing delivery in multi-departmental, multi-disciplined environments.

Proficient in leading and facilitating change management initiatives within organizations. Experienced in navigating transitions, mergers, and technological advancements while ensuring smooth adoption by teams and stakeholders. Adept at creating change management strategies that minimize resistance and maximize successful outcomes.

Adept at leading diverse teams by embracing a servant leadership philosophy with emphasis on transparency and collaboration with global, distributed teams.

Expert in conducting ROI modeling, annual resource allocation, and budget planning to support large teams (400+). Responsible for managing personnel budgets exceeding $4 million and project budgets surpassing $6 million, with sign-off authority of $500k. Prioritizing ruthlessly to align with overall business goals and objectives.

Experienced in proactive risk management, emphasizing identification, assessment, and mitigation of potential threats. Cultivates a culture of risk awareness, ensuring organizational objectives are safeguarded.

Proficient in implementing and managing Customer Relationship Management (CRM) systems to enhance customer interactions, streamline sales and marketing processes, and drive business growth. Expertise in leveraging CRM data to make informed strategic decisions and deliver exceptional customer experiences.

Software Engineer >> Project Manager >> Program Manager >> Engagement Lead >> Product Owner >> Executive

NHL GAMECENTER LIVE APP

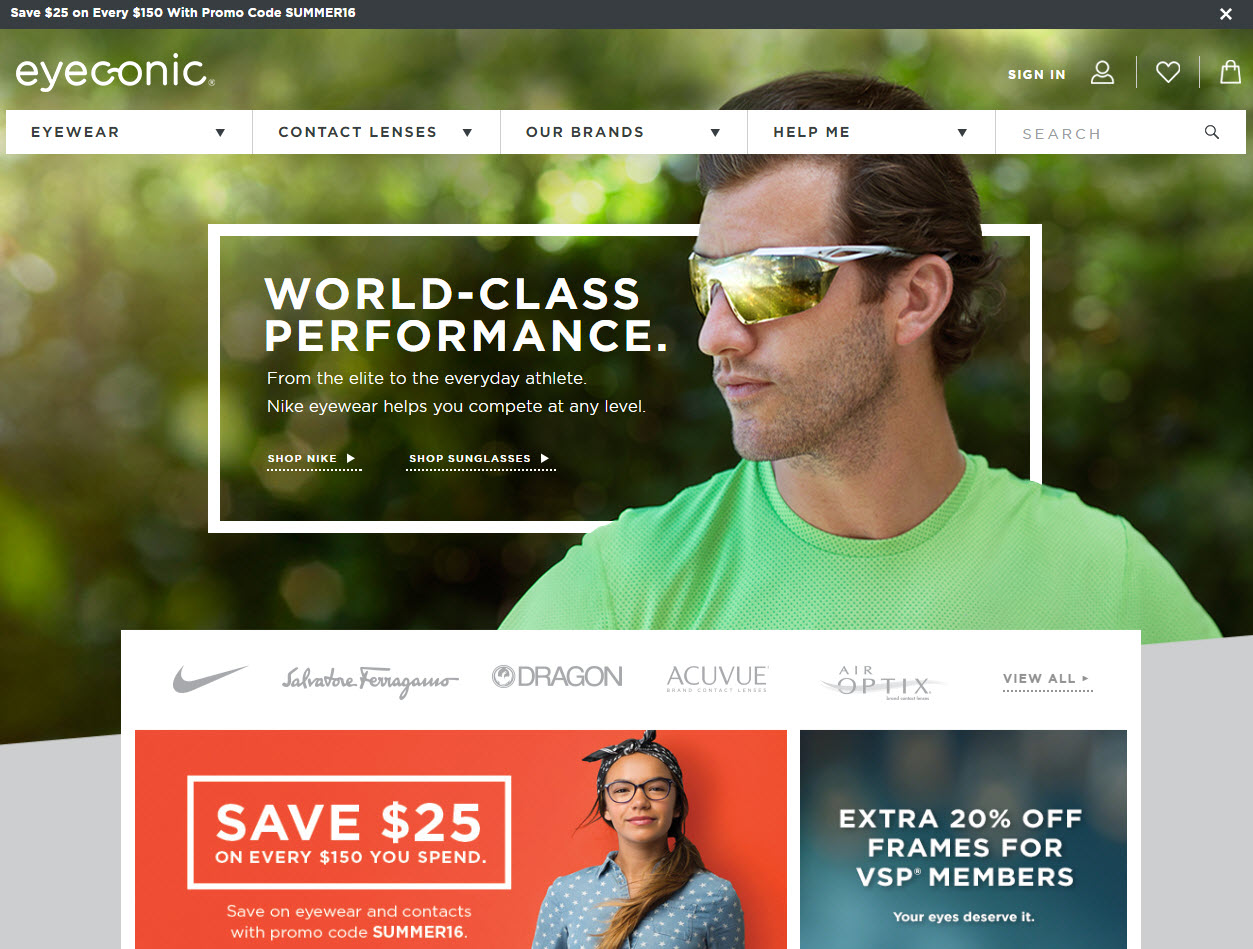

VSP | EYECONIC.COM

My last name is a bit of a challenge: it sounds like “trestle” but with a long “ō” [Tros·tle] /ˈTrosəl/.